Artificial intelligence continues to become more capable. Undress AI is one example of how it could leave young people open to harm.

Learn what it is so you can better protect your child online.

Summary

- Undress AI is a ‘genre’ of apps that use artificial intelligence to alter images or videos.

- Risks include seeing inappropriate content, bullying, abuse and impacts on mental health.

- Research from Graphika showed a 2000% increase in spam referral links to ‘deepnude’ websites.

- It’s now illegal to generate and distribute ‘intimate’ deepfakes.

- Preventative conversations about undress AI can keep children from getting involved.

- Find more resources to support children’s online safety.

What is ‘undress AI’?

Undress AI describes a type of tool that uses artificial intelligence to remove the clothes of individuals in images.

While each app or website might work differently, they all offer a similar service. Although the manipulated image doesn’t actually show the victim’s real nude body, it can imply that it does.

Perpetrators who use undress AI tools might keep the images for themselves or might share them more widely. They could use these images for sexual coercion (sextortion), bullying/abuse or as a form of revenge porn.

Children and young people face additional harm if someone ‘undresses’ them using this technology. A report from the Internet Watch Foundation (IWF) found over 11,000 potentially criminal AI-generated images of children on one dark web forum dedicated to child sexual abuse material (CSAM). The IWF assessed around 3,000 of these images as criminal.

The IWF said that it also found “many examples of AI-generated images featuring known victims and famous children.” Generative AI can only create convincing images if it learns from accurate source material. Essentially, AI tools that generate CSAM would need to learn from real images featuring child abuse.

Risks to look out for

Undress AI tools use suggestive language to draw users in, which makes children more likely to follow their curiosity and try them.

Children and young people might not yet understand the law. As such, they might struggle to separate harmful tools from those which promote harmless fun.

Inappropriate content and behaviour

The curiosity and novelty of an undress AI tool could expose children to inappropriate content. Because it’s not showing a ‘real’ nude image, they might then think it’s okay to use these tools. If they then share the image with their friends ‘for a laugh’, they are likely breaking the law without knowing it.

Without intervention from a parent or carer, they might continue the behaviour, even if it hurts others.

Privacy and security risks

Many legitimate generative AI tools require payment or a subscription to create images. So, if a deepnude website is free, it might produce low-quality images or have lax security. If a child uploads a clothed image of themselves or a friend, the site or app might misuse it, along with the ‘deepnude’ it creates.

Children using these tools are unlikely to read the Terms of Service or Privacy Policy, so they face risks they might not understand.

Creation of child sexual abuse material (CSAM)

The IWF also reported that cases of ‘self-generated’ CSAM circulating online increased by 417% from 2019 to 2022. Note that the term ‘self-generated’ is imperfect as, in most cases, abusers coerce children into creating these images.

However, with the use of undress AI, children might unknowingly create AI-generated CSAM. If they upload a clothed picture of themselves or another child, someone could ‘nudify’ that image and share it more widely.

Cyberbullying, abuse and harassment

Just like other types of deepfakes, people can use undress AI tools or ‘deepnudes’ to bully others. This could include claiming a peer sent a nude image of themselves when they didn’t. Or, it might include using AI to create a nude with features that bullies then mock.

It’s important to remember that sharing nude images of peers is both illegal and abusive.

How widespread is ‘deepnude’ technology?

Research shows that usage of these types of AI tools is increasing, especially to remove clothes from female victims.

One undress AI site says that its technology was “not intended for use with male subjects.” This is because the site trained the tool using female imagery, as is the case for most AI tools of this type. Of the AI-generated CSAM images that the Internet Watch Foundation investigated, 99.6% also featured female children.

Research from Graphika highlighted a 2000% increase in referral link spam for undress AI services in 2023. The report also found that 34 of these providers received over 24 million unique visitors to their websites in one month. The researchers predict “further instances of online harm,” including sextortion and CSAM.

Perpetrators will likely continue to target girls and women over boys and men, especially if these tools mainly learn from female images.

What does UK law say?

Until recently, those creating sexually explicit deepfake images were not breaking the law unless the images were of children.

However, the Ministry of Justice announced a new law this week that will change this. Under the new law, those creating sexually explicit deepfake images of adults without their consent will face prosecution. Those convicted will also face an “unlimited fine.”

This contradicts a statement made in early 2024, which said that creating a deepfake intimate image was “not sufficiently harmful or culpable that it should constitute a criminal offence.”

As recently as last year, perpetrators could create and share these images (of adults) without breaking the law. However, the Online Safety Act made it illegal to share AI-generated intimate images without consent in January 2024.

Generally, this law should cover any image that’s sexual in nature. This includes those that feature nude or partially nude subjects.

One thing to note, however, is that this law relies on the intention to cause harm. So, a person who creates a sexually explicit deepfake must do so to humiliate or otherwise harm the victim. The problem is that intent is fairly difficult to prove, so it might be difficult to actually prosecute those who create sexually explicit deepfakes.

How to keep children safe from undress AI

Whether you’re concerned about your child using undress AI tools or becoming a victim, here are some actions to take to protect them.

Have the first conversation

Over one-quarter of UK children report seeing pornography by age 11, and one in ten say they first saw porn at age 9. Curiosity might also lead children to seek out undress AI tools. So, before they get to that point, it's important to talk about appropriate content, positive relationships and healthy behaviours.

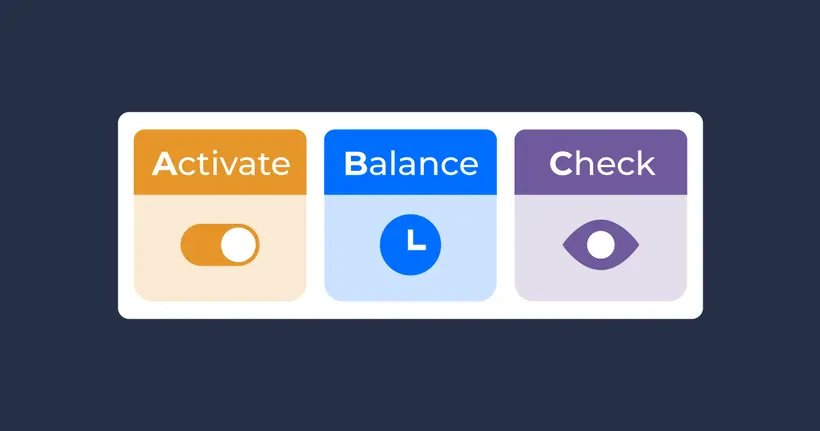

Set website and app limits

Block websites and set content restrictions across broadband and mobile networks as well as devices and apps. This will reduce the chance of them stumbling upon inappropriate content as they explore online. Restricting access to these websites can also form part of a broader conversation with your child.

Build children's digital resilience

Digital resilience is a skill that children must build. It means they can identify potential online harm and take action if needed. They know how to report, block and think critically about content they come across. This includes knowing when they need to get help from their parent, carer or other trusted adult.

Resources for further support

Learn more about artificial intelligence and find resources to support victims of undress AI.
