What is AI and is it safe?

Guidance for parents & carers

Artificial intelligence (AI) is an increasingly common part of online life. As it continues to develop, make sure you know how it works and how to keep your child safe if they use it.

What you need to know about artificial intelligence


- The most commonly used form of AI among parents and children is the chatbot, which children use for schoolwork, advice and companionship.

- AI comes in many different forms, including chatbots, search engine overviews and features built into popular apps.

- Most AI tools do not yet include parental control features or robust safety settings, leaving young users at risk.

- There is a range of AI tools and chatbots your child might use that you should know about.

- Benefits of using AI include developing critical thinking and the opportunity to take on new educational challenges.

- Risks include misuse, oversharing and dependency on AI tools as a replacement for active learning.

- Find a range of resources to learn more about artificial intelligence.

What is AI?

AI stands for artificial intelligence. It refers to computer systems which seem to perform human-like tasks such as reasoning and learning.

Of course, it can’t actually reason or learn in the same way as a human. This is why it’s considered ‘artificial’ intelligence. Humans must programme artificial intelligence tools to respond in certain ways. Humans also have to provide the information that AI draws from when completing tasks.

Most of us are familiar with generative AI, such as AI chatbots, so most guidance for you and your child will focus here.

How children access AI

Most mainstream AI chatbots are easily and freely accessible to anyone with an internet connection. One of the most popular chatbots among young people is My AI from Snapchat. This chatbot appears in every user’s app as a friend, usually at the top of the list. As such, children using Snapchat can easily use the AI chatbot too.

Because children are naturally curious, hearing about a new AI tool may lead them to search for it themselves. There are very few barriers, which can leave children open to harm if they haven’t learned how to use the tools safely.

How children use chatbots

Our research shows that children use AI chatbots in a range of ways. Help with schoolwork/homework, finding information and curiosity top the list. However, many children who use AI also do so to find advice or companionship.

Children who use AI for school say they get help with essay planning, Maths, languages and creative writing. They also use chatbots to help with exam revision and with subjects they struggle with.

When the AI chatbot works well, children said it felt easier to understand than materials supplied at school.

Unfortunately, different chatbots respond to schoolwork questions in different ways. Some are more helpful, providing follow-up questions and resources, while others aren’t able to offer additional support.

Additionally, some of the information chatbots supply is not reliable. If information is wrong, it’s not always obvious right away, which can lead children to believe the wrong thing.

If your child uses chatbots to support learning, make sure they do so sparingly. You should also teach them how to use the tool properly so they understand what appropriate use looks like.

Children who use chatbots for advice say they ask for help on a range of topics. Examples include getting advice for redesigning a room and learning healthy ways to cope with exam stress.

Chatbots can give advice instantly, especially on topics children might feel awkward talking about with friends or parents. They can also support vulnerable children who might not have close people to rely on.

Children have said they asked chatbots for advice on how to say something to a friend or for help making decisions. In some cases, the chatbots also signpost further resources to help.

However, using chatbots in this way can lead children to rely on something that can give inconsistent advice. Platforms like Character.AI and Replika, for example, let users create their own chatbots, and these can give harmful advice.

While some chatbot platforms use moderation to limit harmful advice, this isn’t always 100% effective. So, it’s important that your child understands safe ways to use these tools. Chatbots should not be a child’s only source of advice.

Vulnerable children are more likely to use AI chatbots which mimic human connection. Specifically, they’re more likely to use chatbots for escapism, friendship and therapy. Reasons behind using chatbots in this way range from wanting a friend to not having anyone else to talk to.

Although chatbots are tools, many children refer to them as ‘he’, ‘she’ or ‘they’. This suggests that for some children, the line between tool and human-like companion isn’t always clear.

As many AI chatbots shift towards creating an illusion of relationships, children face additional risks. For instance, chatbots only know what a child shares, so they are unable to provide outside context or balanced viewpoints.

It’s important to discuss the limits of AI with children. This can help to reinforce that chatbot emotions and personalities aren’t real.

How does AI work?

Artificial intelligence works by calculating and providing the most likely outcome. What this looks like to the average person depends on what kind of AI you encounter.


How chatbots work

Chatbots are the most common form of AI used by everyday people, including children. Google Gemini, Character.AI and ChatGPT all use a conversational form of artificial intelligence. Developers design chatbots this way so that it feels like you’re talking to a real person, which can make you more willing to engage with them.

How chatbots work with Large Language Models (LLMs)


While chatbots might seem to have all the answers, they make plenty of mistakes. A large reason for this is the way they work.

To start, humans provide information to the AI chatbot to ‘teach’ the tool. However, the tool’s responses work like predictive text: it provides the word that is statistically most likely to come next, which is not necessarily correct information.

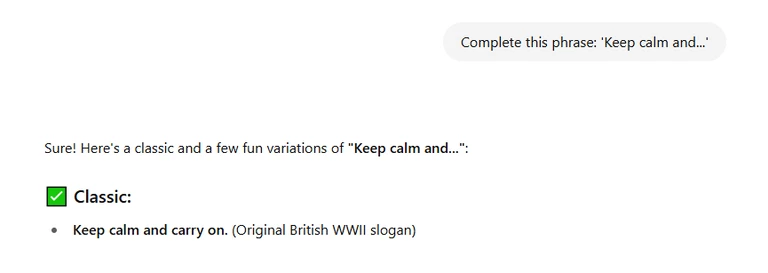

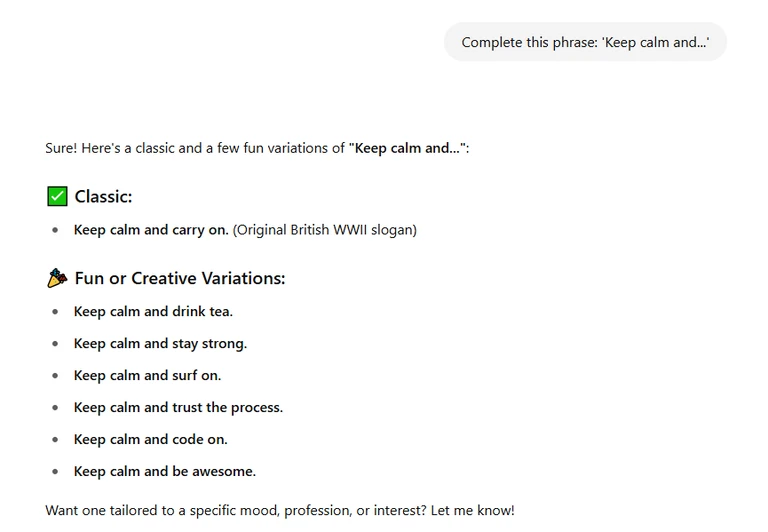

Chatbots tend to respond based on what they suppose the user wants the response to be. For example, many British people will know the likely end of the phrase ‘Keep calm and…’ is ‘carry on.’ However, there are several variations, so if you’re searching for something else, you might struggle to get there.
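To make the ‘predictive text’ idea more concrete, here is a toy sketch in Python. It is a deliberate simplification built on an assumed, made-up set of training phrases: it simply counts which word most often follows another and always picks the most common one. Real chatbots use large neural networks trained on vast amounts of text, not simple word counts, but the sketch shows why the most common ending wins.

```python
from collections import Counter, defaultdict

# Made-up "training" phrases (an assumption for illustration only).
PHRASES = [
    "keep calm and carry on",
    "keep calm and carry on",
    "keep calm and carry on",
    "keep calm and drink tea",
]


def build_model(phrases):
    """Count which word follows each word across all the phrases."""
    following = defaultdict(Counter)
    for phrase in phrases:
        words = phrase.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent next word, or None if the word was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def complete(model, prompt, max_words=5):
    """Extend the prompt one 'most likely' word at a time."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        nxt = predict_next(model, words[-1])
        if nxt is None:  # no known continuation, so stop
            break
        words.append(nxt)
    return " ".join(words)


model = build_model(PHRASES)
print(complete(model, "Keep calm and"))  # keep calm and carry on
```

Because ‘carry’ follows ‘and’ three times out of four in this toy data, the model never suggests ‘drink tea’, even if that is the variation you were looking for. Nothing in the code checks whether an answer is true; it only picks what is most common.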

Of course, AI chatbot companies will continue to work on improving their product to reduce these limits.

How AI-powered search engines work

Many search engines now incorporate AI responses into their search results. These “overviews” appear when the search engine’s systems identify a search query where an overview might be helpful.

In most cases, the overview will contain key information in response to your query. It will also provide links where you can learn more. However, just like chatbots, it can get things wrong.

Take the made-up phrase “blue as an elephant.” If you search for this phrase on Google Search, the AI Overview provides a made-up answer.

However, if you ask about the phrase directly within Google Gemini, the chatbot tells you it’s not a real phrase. It also provides suggestions of what it could mean, though that explanation differs from the AI Overview in the search engine.

So, while AI-generated overviews can be helpful, it’s important to check the source information to confirm whether it’s true. This applies to any information children encounter online.

How AI works with social media

Following the popularity of ChatGPT, many tech companies are working to add AI to their platforms. You can see this with My AI on Snapchat, Meta AI on WhatsApp and Grok on X. They all work in different ways.

For example, Grok on X partially learns from real-time posts within the platform. However, My AI does not access real-time data, which can limit its responses.

Generally, all chatbots let users hold a conversation, but who shapes the chatbot’s ‘personality’ differs. With a platform like Gemini, Google programmes the ‘personality’.

A platform like Character.AI, on the other hand, allows users to create personalities to guide their chatbot experience. Like other user-generated content, this can lead to greater levels of risk. As such, you should keep a closer eye on your child’s use of these platforms, if they use them at all.

The best way to understand how AI chatbots work and how they use your child’s data is to explore the privacy policies or help centres. You can explore some of them below:

- Snapchat My AI Support articles

- Meta AI Privacy Centre

- Microsoft Copilot Privacy Statement

- X Grok Help Centre

Is AI safe for children to use?

Most AI tools are not designed for children, which can leave them vulnerable to harm. Popular chatbots like ChatGPT, Google Gemini and Microsoft Copilot all require users to be 13 or older. However, not all teenagers have the right skills to use these platforms, so keep that in mind when thinking about allowing access.

Additionally, many chatbots have app versions available in device app stores, and app store age ratings can help guide whether these apps are appropriate for your child. However, some app store age guidance might not reflect the minimum ages that platforms require. So, checking both is best.

None of these chatbots have parental controls within the platforms. The only settings often relate to privacy. Review these settings to make sure the AI is not learning from your child’s questions or responses.

If you want to limit access to these tools, you can use external parental control tools like Google Family Link or Apple Screen Time. With both of these, you can block access to AI websites or apps, or you can set time limits for each app.

Privacy and data considerations

In whatever way your family uses artificial intelligence, make sure you review the Privacy Policy and Terms of Use. Look out for:

- What data is collected: Does the tool collect things like voice recordings, typed responses or images?

- How the platform stores and shares data: Is it shared with third parties? How long is it kept?

- What control you have over the data: Can you delete your child’s data or limit what the platform collects?

- Minimum age requirements: Is the tool intended for children, or does it have a minimum age to use?

- Parental controls and safety settings: Can you turn off personalised content or adjust privacy settings?

Spending a few minutes checking these points can help you make a safer and more informed decision for your child.

Popular AI tools to know about

Learn more about commonly used artificial intelligence tools that your child might come across.

What are the benefits of AI?

There are many things your child and family can benefit from when it comes to AI. However, you must take steps to use the AI tools safely in order to benefit from them.

With the proper precautions, using AI can lead to the following benefits.

Enhanced learning opportunities

4 in 10 children use chatbots “for help with schoolwork/homework” and “for finding information or learning about something.” This means children already see the benefits of learning with AI.

However, it’s likely that most of these children have not had education about the appropriate ways to learn with these tools.

You can help your child use AI chatbots safely by exploring the right and wrong ways to use the tool. For example, instead of asking the chatbot for an answer to a Maths problem, they can say “don’t give me the answer, but show me how to find the answer.” They can then ask the chatbot to confirm whether the answer they reached is correct.

Developing critical thinking skills

Many parents and teachers worry that children’s use of AI will negatively impact their learning. However, it actually offers an opportunity to further learning by developing greater critical thinking skills.

When calculators became more readily accessible, many people had the same concerns that people now have about AI. They worried that having calculators to hand would make children reliant on them. However, access to calculators meant that children could learn more complicated types of Maths designed around calculators. GCSE Maths now has both calculator and non-calculator exams to reflect this.

Similarly, effective uses of an AI tool in the classroom centre on creating lessons that actively involve its use.

For example, an English teacher could have their Year 10 students use AI to write a Grade 5 response to a sample exam question. Then, in class, students could work to improve that written piece based on previous lesson outcomes.

This gives students the role of ‘teacher’ to help them think critically about what improvement from Grade 5 to 9 might look like.

Other uses of AI for education could include ‘interviewing’ book characters or historical figures and searching their answers for inaccuracies. Students could also have AI produce a basic piece of creative writing that they then improve.

When AI is used creatively, it can enhance your child’s critical thinking and assessment skills to help with their learning.

Risks of artificial intelligence

Because artificial intelligence is still fairly new, there are a range of risks with using these tools. As AI tools continue to develop, we might see greater safety features but may also see additional risks of harm.

While parents, teachers and children can use AI to harness a range of benefits, not everyone will know how to do that. If a child doesn’t learn appropriate use, they might become overly reliant on the technology or misuse it.

This could include:

- Asking an AI chatbot to complete all ‘thinking’ tasks for them;

- Leaving more complex tasks for AI to complete instead of trying it themselves;

- Struggling to apply learning in testing spaces because of overuse of these tools;

- Using harmful AI tools (such as nudifying apps) for ‘fun’, not understanding the harm.

Children who use AI chatbots as a ‘friend’ might prefer them to real friends, especially if they struggle with communication offline. An autistic child, for example, might struggle to make meaningful connections with their peers due to sensory overload or misreading social cues. This could lead to bullying rather than friendships, but an AI chatbot would be more ‘understanding’.

While some AI chatbots can provide mental health support, it’s important to supervise this use. Where possible, prioritise real friendships both online and off.

Generative AI can get things wrong. This can happen because of its source data, programming, errors in its calculations or misunderstanding. Young children in particular might struggle with asking questions in the ‘right’ way for an accurate response.

Without fact-checking or critical thinking skills, a child could easily take a chatbot’s response at face value. They might then believe that false information is true before sharing it more widely.

So, it’s important to show them how to check information and encourage them to do so.

How many of us actually read the privacy policies for the new tools we use? Whatever that number is, the number of children reading these policies is likely even smaller. Popular AI tools like ChatGPT, Gemini and Copilot collect information from the prompts users put into the chatbot.

This doesn’t mean that the platforms will share this data with others, but it does mean that they keep it. While privacy and security options for AI are still developing, children should keep personal information to themselves.

If your family uses a chatbot or other AI tool, make sure you check the privacy settings and turn data collection off. When exploring new tools, check that this is an option and make sure your child knows what is and isn’t okay to share.

Supporting resources

Learn more about artificial intelligence alongside your child with the following resources.
