Children and young people might not yet understand the law. As such, they might struggle to separate harmful tools from those which promote harmless fun.

Inappropriate content and behaviour

Curiosity about undress AI tools, and their novelty, could expose children to inappropriate content. Because a tool is not showing a ‘real’ nude image, they might think it’s okay to use. If they then share the image with their friends ‘for a laugh’, they are breaking the law, likely without knowing it.

Without intervention from a parent or carer, they might continue the behaviour, even if it hurts others.

Privacy and security risks

Many legitimate generative AI tools require payment or subscription to create images. So, if a deepnude website is free, it might produce low-quality images or have lax security. If a child uploads a clothed image of themselves or a friend, the site or app might misuse it, along with the ‘deepnude’ it creates.

Children using these tools are unlikely to read the Terms of Service or Privacy Policy, so they face risks they might not understand.

Creation of child sexual abuse material (CSAM)

The IWF also reported that cases of ‘self-generated’ CSAM circulating online increased by 417% from 2019 to 2022. Note that the term ‘self-generated’ is imperfect as, in most cases, abusers coerce children into creating these images.

However, with the use of undress AI, children might unknowingly create AI-generated CSAM. If they upload a clothed picture of themselves or another child, someone could ‘nudify’ that image and share it more widely.

Cyberbullying, abuse and harassment

Just like other types of deepfakes, people can use undress AI tools or ‘deepnudes’ to bully others. This could include claiming a peer sent a nude image of themselves when they didn’t. Or, it might include using AI to create a nude with features that bullies then mock.

It’s important to remember that sharing nude images of peers is both illegal and abusive.

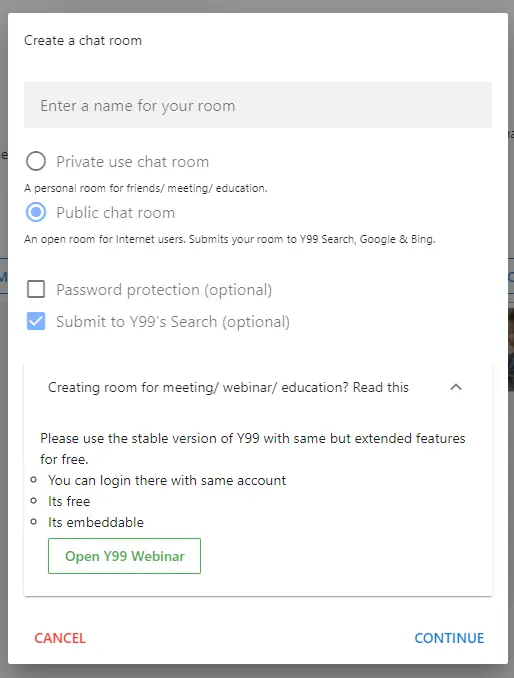

Users can also create their own public or private chat rooms. They can choose to add a password or untick the option to ‘submit to Y99’s Search’.
