Video sharing platforms are a key part of digital life. You can read our response to Ofcom's consultation on how to regulate these platforms here.

Internet Matters is pleased to take part in this welcome consultation. We have a few introductory comments which frame our thinking before we get into the specific questions. Internet Matters exists to help families benefit from connected technology. We are a not-for-profit, funded by the internet industry – and we are pleased to bring leading brands together to focus on child safety and digital wellbeing. We provide expert advice to parents, presented in a really usable way, by age of the child, by device, app or platform, or by issue.

We know that engaging with our content gives parents, carers and, increasingly, professionals the confidence and tools they need to engage with the digital lives of those they care for. Having an engaged adult in a child's life is the single most important factor in ensuring they are safe online, so providing those adults with the tools, resources and confidence to act is a fundamental part of digital literacy.

The AVMSD stems from a body of EU law that is based on the "country of origin" principle. In practice, as the consultation document states, this means the interim regulatory framework will only cover 6 mainstream sites and 2 adult content sites. This will prove very hard to explain to parents, who could rightly expect that if the content is viewable in the UK it is regulated in the UK.

While acknowledging the limitations of the AVMSD, the consultation repeatedly notes that, to the extent the forthcoming Online Harms legislation will address age-inappropriate content, it will not feel bound to honour the country of origin principle. This is a position we endorse. If the content is viewable in the UK, then it should conform with UK rules.

The AVMSD is not limited in this way. In this case the AVMSD has got it right. It makes no sense to limit the scope of the Online Harms legislation to platforms which allow user-generated content to be published. What matters is the nature of the content, not how or by whom it was produced.

Question 19: What examples are there of effective use and implementation of any of the measures listed in article 28(b)(3) the AVMSD 2018? The measures are terms and conditions, flagging and reporting mechanisms, age verification systems, rating systems, parental control systems, easy-to-access complaints functions, and the provision of media literacy measures and tools. Please provide evidence and specific examples to support your answer.

Our work listening to families informs everything we do – and given we are a key part of delivering digital literacy for parents, we wanted to share some insights with you. Parents seek advice about online safety when one of four things happens:

Parents seek help most often through an online search or by asking for help at school. Clearly, throughout lockdown, searching for solutions has been more important, meaning evidence-based advice from credible organisations must be at the top of the rankings.

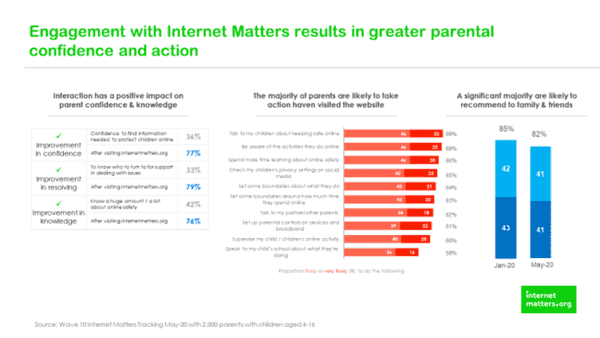

Once parents are engaged with advice, it has to be easy to understand – and so we regularly poll parents on what they would think, say and do differently after engaging with our website. The charts below demonstrate that serving parents content that meets their requirements drives meaningful and measurable change.

These data points indicate that digital literacy amongst parents can be, and is, influenced by good quality resources – which equip them to have routine conversations with their children about their digital lives. Moreover, our pages on parental controls consistently rank in the top 10 most popular pages.

Question 20: What examples are there of measures which have fallen short of expectations regarding users' protection and why? Please provide evidence to support your answer wherever possible.

We have to conclude that moderation of live streaming is not working currently and perhaps cannot work: abuse of platforms' terms and conditions happens in real time. In the following two examples it is not simply terms and conditions that were abandoned; it was much more serious. The tragic recent suicide was circulated globally within seconds and, although platforms took quick and decisive action, too many people saw that harrowing content on mainstream apps, with little or no warning as to graphic content. As we all know, this wasn't the only example of live streaming moderation failure, as the Christchurch shootings highlighted back in March 2019.

Clearly, these are complex issues where someone deliberately sets out to devastate lives through their own actions and their decision to live stream it. Of course, the two examples are not comparable, save in what we can learn from them and what a regulator could meaningfully do in these situations. Perhaps it is in the very extreme and exceptional nature of this content that comfort can be found – in that in nearly every other circumstance this content is identified and isolated in the moments between uploading and sharing. Clearly, these are split-second decisions which are reliant on outstanding algorithms and qualified human moderators. Perhaps the role of the regulator in this situation is to work with the platforms onto which such content can be or was uploaded, viewed and shared, to understand and explore what went wrong and then agree concrete actions to ensure it cannot happen again. Perhaps those learnings could be shared by the regulator in a confidential way with other platforms, simply for the purpose of ensuring lessons are learnt as widely as possible – for the protection of the public and, where appropriate, for the company to provide redress. For that to work, the culture of the regulator and its approach have to be collaborative and engaging rather than remote and punitive.

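Recommendations the regulator may wish to deploy could include (but are not limited to) requiring companies to develop plans to work together so that notifications are shared immediately across platforms, as there is no commercial advantage in keeping this information within one platform.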

The other issue that requires detailed consideration is comments under videos – be that toddlers in a paddling pool, or teenagers lip-synching to music videos. Perhaps there are two separate issues here. For the accounts of young people aged 13 to 16, unless and until anonymity on the internet no longer exists, 'platforms should be encouraged to take a cautious approach to comments, removing anything that is reported and reinstating once the comment has been validated.'

We would encourage the regulator to continue to work with platforms to identify videos that, although innocent in nature, attract inappropriate comments, and to suspend the ability to comment publicly under them. Often account holders have no idea who the comments are being left by, and context is everything. A peer admiring a dance move or an item of clothing is materially different from comments from a stranger.

For as long as sites are not required to verify the age of their users, live streams will be both uploaded and watched by children. Children have as much right to emerging technology as anyone else – and have to be able to use it safely. So, the challenge for the regulator becomes how to ensure children who are live streaming can do so without inappropriate contact from strangers.

Whilst many young people tell us they like and appreciate the validation they receive from comments, the solution isn't to retain the functionality. It's to stop it, and to invest the time and money in understanding what is happening in the lives of our young people such that the validation of strangers is so meaningful to them.

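For parents posting images of toddlers in paddling pools, there are both technical and educational responses. Firstly, it should be possible for images to be viewed only in private mode, so that strangers cannot comment. Secondly, there should be an educational campaign aimed at parents, perhaps beginning in the conversations between expectant mothers and midwives, about how much of a child's life it is appropriate to post online for the world to see. There is a role for the regulator in challenging the show-reel lifestyle that has so quickly become the norm.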

Question 21: What indicators of potential harm should Ofcom be aware of as part of its ongoing monitoring and compliance activities on VSP services? Please provide evidence to support your answer wherever possible.

Over the last 18 months, Internet Matters has invested significant time and resource in understanding the online experience of vulnerable children, and in particular how it differs from that of their non-vulnerable peers. Our report, Vulnerable Children in a Digital World, published in 2019, demonstrates that vulnerable children have a markedly different experience online. Further research demonstrates that children and young people with SEND are at particular risk, as they are less able to critically assess contact risks and more likely to believe people are who they say they are. Likewise, care-experienced children are more at risk of seeing harmful content, particularly content around self-harm and suicide. There are many more examples.

The point here is not that vulnerable young people should have a separate experience if they identify themselves to the platforms, but more that the regulator and platforms recognise that there are millions of vulnerable children in the UK who require additional support to benefit from connected technology. The nature of support will vary but will inevitably include additional and bespoke digital literacy interventions, as well as better content moderation to remove dangerous content before it is shared.

Data from the Cybersurvey 2019, published by Youthworks in partnership with Internet Matters, indicates:

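Content about self-harm is 'often' seen by young people who are already vulnerable, especially those with an eating disorder (23%) or speech difficulties (29%), whereas only 9% of young people with no vulnerabilities have 'ever' seen it and only 2% have seen it 'often'.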

Question 22: The AVMSD 2018 requires VSPs to take appropriate measures to protect minors from content which 'may impair their physical, mental or moral development'. Which types of content do you consider relevant under this? Which measures do you consider most appropriate to protect minors? Please provide evidence to support your answer wherever possible, including any age-related considerations.

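In addition to the self-harm and suicide content detailed in our answer to Question 21, there are several other types of content that may impair children's physical, mental or moral development, including (but not limited to):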

Perhaps the way to consider this is by reviewing and updating the content categories the Internet Service Providers use for blocking content through the parental control filters. All user-generated content should be subject to the same restrictions for children. What matters to the health and wellbeing of children is the content itself, not whether the content was created by a mainstream broadcaster or someone down the road.

Which measures are most appropriate to protect minors?

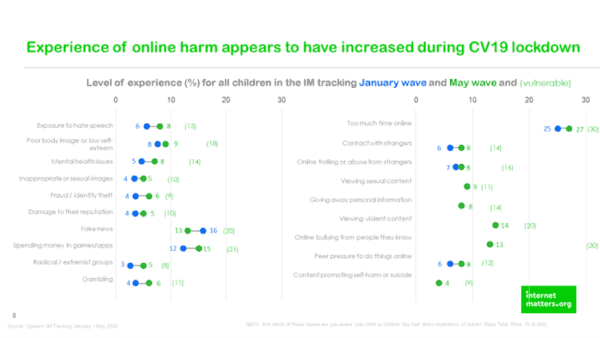

There is an urgency to this, as our data shows that the experience of online harms increased during lockdown.

Question 23: What challenges might VSP providers face in the practical and proportionate adoption of measures that Ofcom should be aware of? We would be particularly interested in your reasoning of the factors relevant to the assessment of practicality and proportionality.

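It may be useful here to distinguish between illegal content, where there are very clear requirements, and legal but harmful content, where the picture is far more confused.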

This is a serious and complex problem which will require significant work between the platforms and the regulator to resolve. Given the Government is minded to appoint Ofcom to be the Online Harms Regulator, there will be as much interest in how this is done as in the fact that it is done. Precedents will be set, and expectations created.

Question 24: How should VSPs balance their users' rights to freedom of expression, and what metrics should they use to monitor this? What role do you see for a regulator?

(See paragraph 25 and Article 28(b)(7) in Annex 2.32.) Please provide evidence or analysis to support your answer wherever possible, including consideration on how this requirement could be met in an effective and proportionate way.

Internet Matters has no comment on this question.

Question 26: How can Ofcom best support VSPs to continue to innovate to keep users safe?

Question 27: How can Ofcom best support businesses to comply with the new requirements?

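Question 28: Do you have any views on the set of principles set out in paragraph 2.49 (protection and assurance, freedom of expression, adaptability over time, transparency, robust enforcement, independence and proportionality), and on balancing the tensions that may sometimes occur between them?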